Introduction

This guide provides a comprehensive walkthrough for installing and configuring Kubernetes on Fedora 40, utilizing the 5W1H approach (What, Who, Where, When, Why, How), followed by an analysis of the consequences and a conclusion.

Overview

What

Kubernetes (K8s) is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. In this guide, we will cover the steps to install Kubernetes on Fedora 40.

Who

This guide is intended for system administrators, developers, and IT professionals who want to deploy and manage containerized applications using Kubernetes on Fedora 40.

Where

The installation process should be performed on a Fedora 40 system with administrative privileges.

When

You should consider installing Kubernetes when you need a robust solution to automate the deployment, scaling, and operations of application containers across clusters of hosts.

Why

The advantages and disadvantages of using Kubernetes for container orchestration on Fedora 40 are summarized in the table below.

| Pros | Cons |
|---|---|
| Highly scalable and efficient | Steep learning curve |
| Supports automation and self-healing | Complex to set up and manage |
| Extensive ecosystem and community support | High resource consumption |
| Flexible and portable | Potential compatibility issues with some applications |

How

Follow these steps to install and configure Kubernetes on Fedora 40:

| Step | Action |
|---|---|
| 1 | Update your Fedora 40 system: sudo dnf update |
| 2 | Install the Kubernetes packages: sudo dnf install kubernetes-kubeadm kubernetes-node kubernetes-client |
| 3 | Enable and start the kubelet service: sudo systemctl enable --now kubelet |
| 4 | Initialize Kubernetes on the control plane node: sudo kubeadm init |
| 5 | Set up a pod network for communication: kubectl apply -f [network-config.yaml] |
| 6 | Join worker nodes to the cluster using the join command printed by kubeadm init. |
| 7 | Deploy your first application: kubectl create -f [application.yaml] |

Consequences

The successful installation and configuration of Kubernetes on Fedora 40 will enable you to manage containerized applications at scale. However, improper setup or configuration might lead to issues with cluster stability and application deployment.


Conclusion

Installing Kubernetes on Fedora 40 provides a powerful and scalable solution for managing containerized applications. By following the outlined steps and weighing the pros and cons, you can deploy and manage applications in a way that fits your needs.

Install Kubeadm

Install Kubeadm to configure a multi-node Kubernetes cluster. As a system requirement, each Node must have a unique hostname, MAC address, and product_uuid. The MAC address and product_uuid are normally already unique if you installed the OS on a physical or virtual machine with a common procedure. You can display the product_uuid with the command [dmidecode -s system-uuid]. This example also assumes that Firewalld is disabled. It is based on the following environment.

-----------+---------------------------+--------------------------+------------
           |                           |                          |
       eth0|10.0.0.30              eth0|10.0.0.51             eth0|10.0.0.52
+----------+-----------+   +-----------+------------+   +---------+--------------+
| [ dlp.bizantum.lab ] |   |[ node01.bizantum.lab ] |   | [ node02.bizantum.lab ]|
|    Control Plane     |   |      Worker Node       |   |      Worker Node       |
+----------------------+   +------------------------+   +------------------------+
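The uniqueness requirement above can be checked before anything is installed. The following is a minimal sketch, not part of the original procedure: [node_identity] and [find_duplicates] are hypothetical helper names, the interface name eth0 is taken from the diagram, and the product_uuid is read from sysfs, which normally holds the same value that [dmidecode -s system-uuid] reports.

```shell
#!/bin/sh
# Print "hostname mac product_uuid" for this node on one line.
# Assumes the interface is named eth0 as in the diagram; pass another name if needed.
node_identity() {
    iface="${1:-eth0}"
    mac=$(cat "/sys/class/net/$iface/address" 2>/dev/null || echo unknown)
    # product_uuid is readable by root only
    uuid=$(cat /sys/class/dmi/id/product_uuid 2>/dev/null || echo unknown)
    echo "$(uname -n) $mac $uuid"
}

# Read lines of "hostname mac product_uuid" on stdin (one per node) and
# print every value that occurs more than once across all nodes.
find_duplicates() {
    tr ' ' '\n' | grep -v '^unknown$' | sort | uniq -d
}
```

Run [node_identity] as root on each Node, collect the three lines into one file, and pipe that file into [find_duplicates]. Empty output means that no hostname, MAC address, or product_uuid is shared between Nodes.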

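Step [1] On all Nodes, change settings to meet the system requirements.

# kernel parameters required for Pod networking

[root@bizantum ~]# cat > /etc/sysctl.d/99-k8s-cri.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.ipv4.ip_forward=1
net.bridge.bridge-nf-call-ip6tables=1
EOF

# kernel modules to load at boot

[root@bizantum ~]# printf 'overlay\nbr_netfilter\n' > /etc/modules-load.d/k8s.conf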

[root@bizantum ~]# dnf -y install iptables-legacy

[root@bizantum ~]# alternatives --config iptables

There are 2 programs which provide 'iptables'.

Selection Command

-----------------------------------------------

*+ 1 /usr/sbin/iptables-nft

2 /usr/sbin/iptables-legacy

# switch to [iptables-legacy]

Enter to keep the current selection[+], or type selection number: 2

# disable swap: an empty [zram-generator.conf] turns off the default zram swap device

[root@bizantum ~]# touch /etc/systemd/zram-generator.conf

# disable [firewalld]

[root@bizantum ~]# systemctl disable --now firewalld

# disable [systemd-resolved] (enabled by default)

[root@bizantum ~]# systemctl disable --now systemd-resolved

[root@bizantum ~]# vi /etc/NetworkManager/NetworkManager.conf

# add into [main] section

[main]

dns=default

[root@bizantum ~]# unlink /etc/resolv.conf

[root@bizantum ~]# touch /etc/resolv.conf

# restart to apply changes

[root@bizantum ~]# reboot
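After the reboot, it can help to confirm that the settings from this step took effect before moving on. The sketch below is an illustration under stated assumptions: the function names are hypothetical, and the module names (overlay, br_netfilter) and sysctl keys are the ones commonly required for Kubernetes container runtimes, so adjust them if your configuration files differ.

```shell
#!/bin/sh
# Succeed if the given /proc/swaps-style file lists no active swap area.
# The file has one header line followed by one line per swap area.
swap_is_off() {
    file="${1:-/proc/swaps}"
    awk 'NR > 1 { n++ } END { exit (n > 0) }' "$file"
}

# Succeed if the named kernel module appears in an lsmod-style listing on stdin.
module_loaded() {
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# Report each prerequisite; run as root on every Node after the reboot.
check_prereqs() {
    swap_is_off && echo "swap: off" || echo "swap: STILL ON"
    for m in overlay br_netfilter; do
        lsmod | module_loaded "$m" && echo "module $m: loaded" || echo "module $m: MISSING"
    done
    # Both values are expected to be 1
    sysctl net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables
}
```

Calling [check_prereqs] prints one line per check. The kubelet refuses to start with swap enabled by default, so a "STILL ON" result is worth fixing before running [kubeadm init] or [kubeadm join].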

Step [2] On all Nodes, install the required packages. This example uses CRI-O as the container runtime.

[root@bizantum ~]# dnf -y install cri-o

[root@bizantum ~]# systemctl enable --now crio

[root@bizantum ~]# dnf -y install kubernetes-kubeadm kubernetes-node kubernetes-client cri-tools iproute-tc container-selinux

[root@bizantum ~]# systemctl enable kubelet
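Because the packages are installed separately on every Node, a quick comparison of the installed versions can catch a Node that picked up a different release. This is a small sketch with hypothetical helper names ([minor_of], [same_minor]); it only compares the major.minor part of two version strings and makes no claim about the exact skew that Kubernetes supports.

```shell
#!/bin/sh
# Print the "major.minor" part of a version string such as "v1.29.4"
# or "Kubernetes v1.29.4". Prints nothing if no version is found.
minor_of() {
    echo "$1" | sed -n 's/^.*v\([0-9][0-9]*\.[0-9][0-9]*\)\..*$/\1/p'
}

# Succeed when both version strings share the same major.minor.
same_minor() {
    [ -n "$(minor_of "$1")" ] && [ "$(minor_of "$1")" = "$(minor_of "$2")" ]
}

# Usage on a Node, with the commands provided by the packages installed above:
#   same_minor "$(kubeadm version -o short)" "$(kubelet --version)" \
#       && echo "versions match" || echo "version skew detected"
```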

Step [3] On all Nodes, if SELinux is enabled, change the policy.

[root@bizantum ~]# vi k8s.te

# create new

module k8s 1.0;

require {

type cgroup_t;

type iptables_t;

class dir ioctl;

}

#============= iptables_t ==============

allow iptables_t cgroup_t:dir ioctl;

[root@bizantum ~]# checkmodule -m -M -o k8s.mod k8s.te

[root@bizantum ~]# semodule_package --outfile k8s.pp --module k8s.mod

[root@bizantum ~]# semodule -i k8s.pp

Configure Control Plane Node

Configure the Control Plane Node of the multi-node Kubernetes cluster. This example uses the same environment as the Install Kubeadm section: dlp.bizantum.lab (10.0.0.30) is the Control Plane, and node01.bizantum.lab (10.0.0.51) and node02.bizantum.lab (10.0.0.52) are Worker Nodes.

Step [1] Configure the prerequisites on all Nodes as described in the Install Kubeadm section above.

Step [2] Configure the initial setup on the Control Plane Node. For [--control-plane-endpoint], specify the hostname or IP address on which Etcd and the Kubernetes API server run. For [--pod-network-cidr], specify the network that the Pod Network uses. Several plugins are available for the Pod Network (see the details below). ⇒ https://kubernetes.io/docs/concepts/cluster-administration/networking/ This example uses Calico.

[root@bizantum ~]# kubeadm init --control-plane-endpoint=10.0.0.30 --pod-network-cidr=192.168.0.0/16 --cri-socket=unix:///var/run/crio/crio.sock

[init] Using Kubernetes version: v1.29.4

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [dlp.bizantum.lab kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.30]

[certs] Generating "apiserver-kubelet-client" certificate and key

.....

.....

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.0.0.30:6443 --token katr7o.jdyxjkbljrtbubel \

--discovery-token-ca-cert-hash sha256:a0dc94201791381a68ee3b051844843b79b1002f718dfdea4a1b833cee72ff7b \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.30:6443 --token katr7o.jdyxjkbljrtbubel \

--discovery-token-ca-cert-hash sha256:a0dc94201791381a68ee3b051844843b79b1002f718dfdea4a1b833cee72ff7b

# set cluster admin user

# to make a regular user the cluster admin instead, log in as that user and run the [sudo cp] and [sudo chown] commands shown in the output above

[root@bizantum ~]# mkdir -p $HOME/.kube

[root@bizantum ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@bizantum ~]# chown $(id -u):$(id -g) $HOME/.kube/config
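The [kubeadm join] command printed above is needed again on each Worker Node, so it is worth keeping. The sketch below assumes that the output of [kubeadm init] was saved to a file, for example by appending [| tee kubeadm-init.log] to the command (the file name is hypothetical), and pulls the token and CA certificate hash back out of it. The helper names are illustrative only.

```shell
#!/bin/sh
# Extract the bootstrap token from saved `kubeadm init` output (first match).
# Tokens have the form <6 chars>.<16 chars>, lowercase letters and digits.
join_token() {
    sed -n 's/^.*--token \([a-z0-9]\{6\}\.[a-z0-9]\{16\}\).*$/\1/p' "$1" | head -n 1
}

# Extract the CA certificate hash ("sha256:<64 hex digits>") from the same output.
join_hash() {
    sed -n 's/^.*--discovery-token-ca-cert-hash \(sha256:[0-9a-f]\{64\}\).*$/\1/p' "$1" | head -n 1
}

# Usage:
#   echo "kubeadm join 10.0.0.30:6443 --token $(join_token kubeadm-init.log) \\"
#   echo "    --discovery-token-ca-cert-hash $(join_hash kubeadm-init.log)"
```

Bootstrap tokens expire (after 24 hours by default). If the saved token no longer works, running [kubeadm token create --print-join-command] on the Control Plane Node prints a fresh join command.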

Step [3] Configure the Pod Network with Calico.

[root@bizantum ~]# wget https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico.yaml

[root@bizantum ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

serviceaccount/calico-cni-plugin created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

# show state : OK if STATUS = Ready

[root@bizantum ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

dlp.bizantum.lab Ready control-plane 2m58s v1.29.4

# show state : OK if all are Running

[root@bizantum ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-57758d645c-vpbq9 1/1 Running 0 78s

kube-system calico-node-w8qh2 1/1 Running 0 78s

kube-system coredns-76f75df574-kcqsv 1/1 Running 0 3m4s

kube-system coredns-76f75df574-wfgqz 1/1 Running 0 3m4s

kube-system etcd-dlp.bizantum.lab 1/1 Running 0 3m11s

kube-system kube-apiserver-dlp.bizantum.lab 1/1 Running 0 3m21s

kube-system kube-controller-manager-dlp.bizantum.lab 1/1 Running 0 3m14s

kube-system kube-proxy-h222g 1/1 Running 0 3m4s

kube-system kube-scheduler-dlp.bizantum.lab 1/1 Running 0 3m20s
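Right after the Calico manifest is applied, some Pods usually sit in states such as Pending or Init for a short while. Instead of re-running the command by hand, the check above can be scripted. This is a rough sketch with hypothetical function names; it looks only at the STATUS column and does not inspect the READY counts.

```shell
#!/bin/sh
# Read `kubectl get pods -A --no-headers` output on stdin and print
# "namespace/name status" for every Pod that is neither Running nor Completed.
pods_not_ready() {
    awk 'NF >= 4 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Poll every 5 seconds until all Pods have settled.
# Returns 1 if kubectl fails or the timeout (in seconds, default 300) is reached.
wait_for_pods() {
    timeout="${1:-300}"
    waited=0
    while :; do
        out=$(kubectl get pods -A --no-headers) || return 1
        [ -z "$(printf '%s\n' "$out" | pods_not_ready)" ] && return 0
        [ "$waited" -ge "$timeout" ] && return 1
        sleep 5
        waited=$((waited + 5))
    done
}
```

For the Nodes themselves, [kubectl wait --for=condition=Ready nodes --all --timeout=300s] is a built-in alternative.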

Configure Worker Node

Join the Worker Nodes to the multi-node Kubernetes cluster. This example uses the same environment as the Install Kubeadm section: dlp.bizantum.lab (10.0.0.30) is the Control Plane, and node01.bizantum.lab (10.0.0.51) and node02.bizantum.lab (10.0.0.52) are Worker Nodes.

Step [1] Configure the prerequisites on all Nodes as described in the Install Kubeadm section above.

Step [2] Join the Kubernetes cluster that was initialized on the Control Plane Node. The join command is the [kubeadm join ***] line shown at the bottom of the cluster initialization output.

[root@node01 ~]# kubeadm join 10.0.0.30:6443 --token katr7o.jdyxjkbljrtbubel \

--discovery-token-ca-cert-hash sha256:a0dc94201791381a68ee3b051844843b79b1002f718dfdea4a1b833cee72ff7b

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# OK if [This node has joined the cluster]

Step [3] Verify the status on the Control Plane Node. The cluster is healthy if every Node shows STATUS [Ready].

[root@bizantum ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

dlp.bizantum.lab Ready control-plane 6m54s v1.29.4

node01.bizantum.lab Ready <none> 62s v1.29.4

node02.bizantum.lab Ready <none> 24s v1.29.4
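The Worker Nodes show [<none>] in the ROLES column because kubeadm does not label them. This is cosmetic, but a role label can be added if you prefer a clearer listing. The sketch below, with a hypothetical function name, only generates the label commands so that they can be reviewed before anything is changed; the label key node-role.kubernetes.io/worker is the conventional one that kubectl reads for the ROLES column.

```shell
#!/bin/sh
# Read `kubectl get nodes --no-headers` output on stdin and print one
# `kubectl label` command for each Node whose ROLES column is <none>.
worker_label_cmds() {
    awk '$3 == "<none>" { print "kubectl label node " $1 " node-role.kubernetes.io/worker=" }'
}

# Usage on the Control Plane Node:
#   kubectl get nodes --no-headers | worker_label_cmds        # review
#   kubectl get nodes --no-headers | worker_label_cmds | sh   # apply
```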

Deploy Pods

These are the basic operations for creating Pods and related resources on a Kubernetes cluster.

Step [1] Create or delete Pods.

# run [test-nginx] pods

[root@bizantum ~]# kubectl create deployment test-nginx --image=nginx

deployment.apps/test-nginx created

[root@bizantum ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test-nginx-f5c579c7d-mpvbm 1/1 Running 0 13s

# show environment variable for [test-nginx] pod

[root@bizantum ~]# kubectl exec test-nginx-f5c579c7d-mpvbm -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

TERM=xterm

HOSTNAME=test-nginx-f5c579c7d-mpvbm

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

NGINX_VERSION=1.25.5

NJS_VERSION=0.8.4

NJS_RELEASE=3~bookworm

PKG_RELEASE=1~bookworm

HOME=/root

# shell access to [test-nginx] pod

[root@bizantum ~]# kubectl exec -it test-nginx-f5c579c7d-mpvbm -- bash

root@test-nginx-f5c579c7d-mpvbm:/# hostname

test-nginx-f5c579c7d-mpvbm

root@test-nginx-f5c579c7d-mpvbm:/# exit

# show logs of [test-nginx] pod

[root@bizantum ~]# kubectl logs test-nginx-f5c579c7d-mpvbm

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/05/13 01:01:39 [notice] 1#1: using the "epoll" event method

2024/05/13 01:01:39 [notice] 1#1: nginx/1.25.5

2024/05/13 01:01:39 [notice] 1#1: built by gcc 12.2.0 (Debian 12.2.0-14)

2024/05/13 01:01:39 [notice] 1#1: OS: Linux 6.8.7-300.fc40.x86_64

2024/05/13 01:01:39 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/05/13 01:01:39 [notice] 1#1: start worker processes

.....

.....

# scale out pods

[root@bizantum ~]# kubectl scale deployment test-nginx --replicas=3

deployment.apps/test-nginx scaled

[root@bizantum ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx-f5c579c7d-9k9wp 1/1 Running 0 19s 192.168.40.194 node01.bizantum.lab <none> <none>

test-nginx-f5c579c7d-k8ktb 1/1 Running 0 19s 192.168.241.129 node02.bizantum.lab <none> <none>

test-nginx-f5c579c7d-mpvbm 1/1 Running 0 4m50s 192.168.40.193 node01.bizantum.lab <none> <none>

# set service

[root@bizantum ~]# kubectl expose deployment test-nginx --type="NodePort" --port 80

service/test-nginx exposed

[root@bizantum ~]# kubectl get services test-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

test-nginx NodePort 10.102.139.61 <none> 80:32763/TCP 22s
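In the PORT(S) column, the value after the colon is the NodePort, which is opened on every Node. The cluster IP is reachable only from inside the cluster, while the NodePort also works from other hosts on the network. As a small illustration, the hypothetical helper below extracts that port from a PORT(S) value.

```shell
#!/bin/sh
# Extract the node port from a PORT(S) value such as "80:32763/TCP".
# Prints nothing if the value does not have the <port>:<nodePort>/<proto> form.
node_port_of() {
    echo "$1" | sed -n 's/^[0-9][0-9]*:\([0-9][0-9]*\)\/.*$/\1/p'
}

# Usage from a client host, using a Worker Node address from this example:
#   curl "http://10.0.0.51:$(node_port_of 80:32763/TCP)/"
# The port can also be queried directly:
#   kubectl get service test-nginx -o jsonpath='{.spec.ports[0].nodePort}'
```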

# verify accesses

[root@bizantum ~]# curl 10.102.139.61

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

.....

.....

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# delete service

[root@bizantum ~]# kubectl delete services test-nginx

service "test-nginx" deleted

# delete the deployment (this also removes its pods)

[root@bizantum ~]# kubectl delete deployment test-nginx

deployment.apps "test-nginx" deleted
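The commands in this section create resources imperatively. The same Deployment can also be described as a manifest and applied, which makes it easier to keep under version control. The sketch below is one possible way to do that from the shell: [render_deployment] is a hypothetical helper that prints a minimal manifest, using the [app] label that [kubectl create deployment] also sets.

```shell
#!/bin/sh
# Print a minimal nginx Deployment manifest with the given name and
# replica count (default 1) to stdout.
render_deployment() {
    name="$1"
    replicas="${2:-1}"
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
  labels:
    app: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF
}

# Usage:
#   render_deployment test-nginx 3 | kubectl apply -f -
#   render_deployment test-nginx 3 | kubectl delete -f -
```

Changing the replica count and applying again has the same effect as [kubectl scale].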

Enable Dashboard

Enable the Dashboard to manage the Kubernetes cluster from a Web UI. This example uses the same environment as the Install Kubeadm section: dlp.bizantum.lab (10.0.0.30) is the Control Plane, and node01.bizantum.lab (10.0.0.51) and node02.bizantum.lab (10.0.0.52) are Worker Nodes.

Step [1] Enable the Dashboard on the Control Plane Node.

[root@bizantum ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Step [2] Add an account for Dashboard management.

[root@bizantum ~]# kubectl create serviceaccount -n kubernetes-dashboard admin-user

serviceaccount/admin-user created

[root@bizantum ~]# vi rbac.yml

# create new

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

[root@bizantum ~]# kubectl apply -f rbac.yml

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

# get security token of the account above

[root@bizantum ~]# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1Ni.....

# run [kubectl proxy] to access the Dashboard from the Control Plane Node itself

[root@bizantum ~]# kubectl proxy

Starting to serve on 127.0.0.1:8001

# to access the Dashboard from other client hosts, set up port-forwarding instead

[root@bizantum ~]# kubectl port-forward -n kubernetes-dashboard service/kubernetes-dashboard --address 0.0.0.0 8443:443

Forwarding from 0.0.0.0:8443 -> 8443

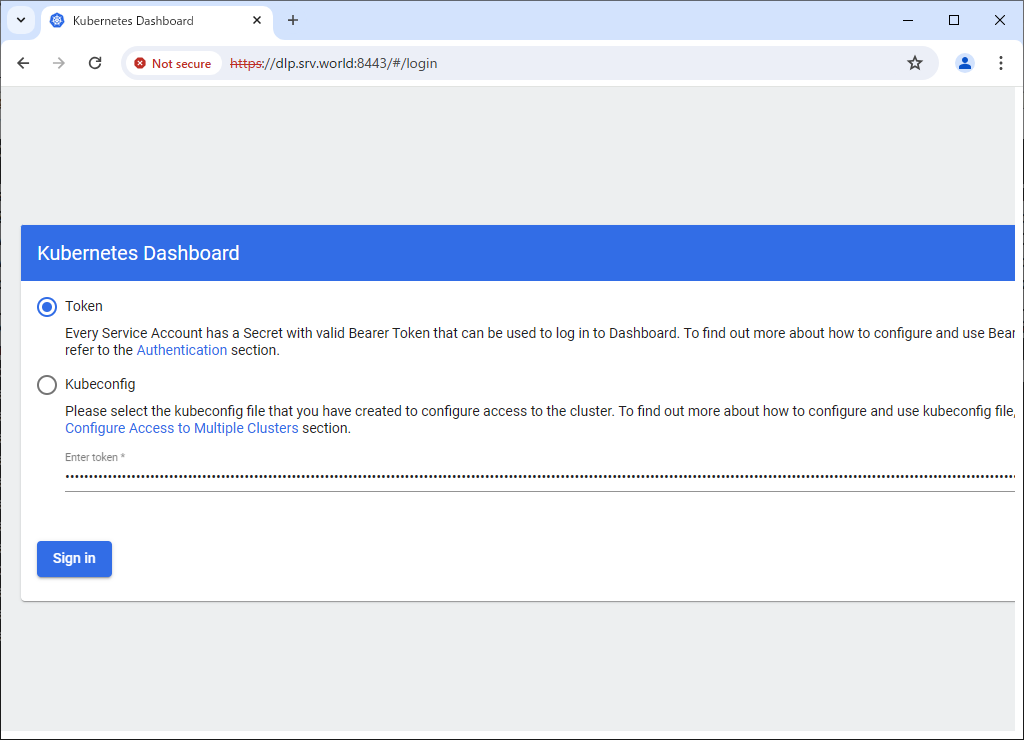

Step [3] If you ran [kubectl proxy], open the URL below in a Web browser on the local host.

⇒ http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

If you set up port-forwarding, open the URL below from a client computer on your local network.

⇒ https://(Control Plane Node Hostname or IP address):(setting port)/

When the following form is displayed, copy and paste the security token you got in Step [2] into the [Enter token] field and click the [Sign in] button.

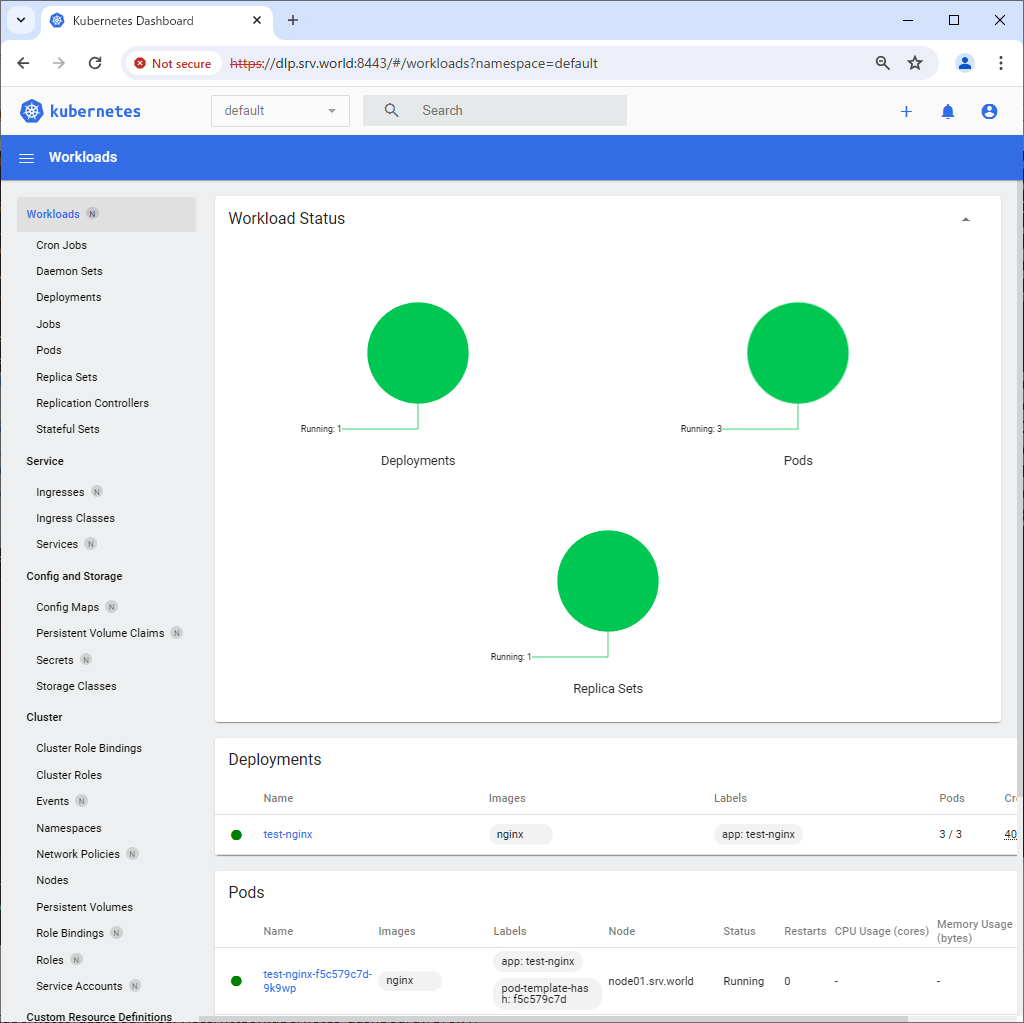

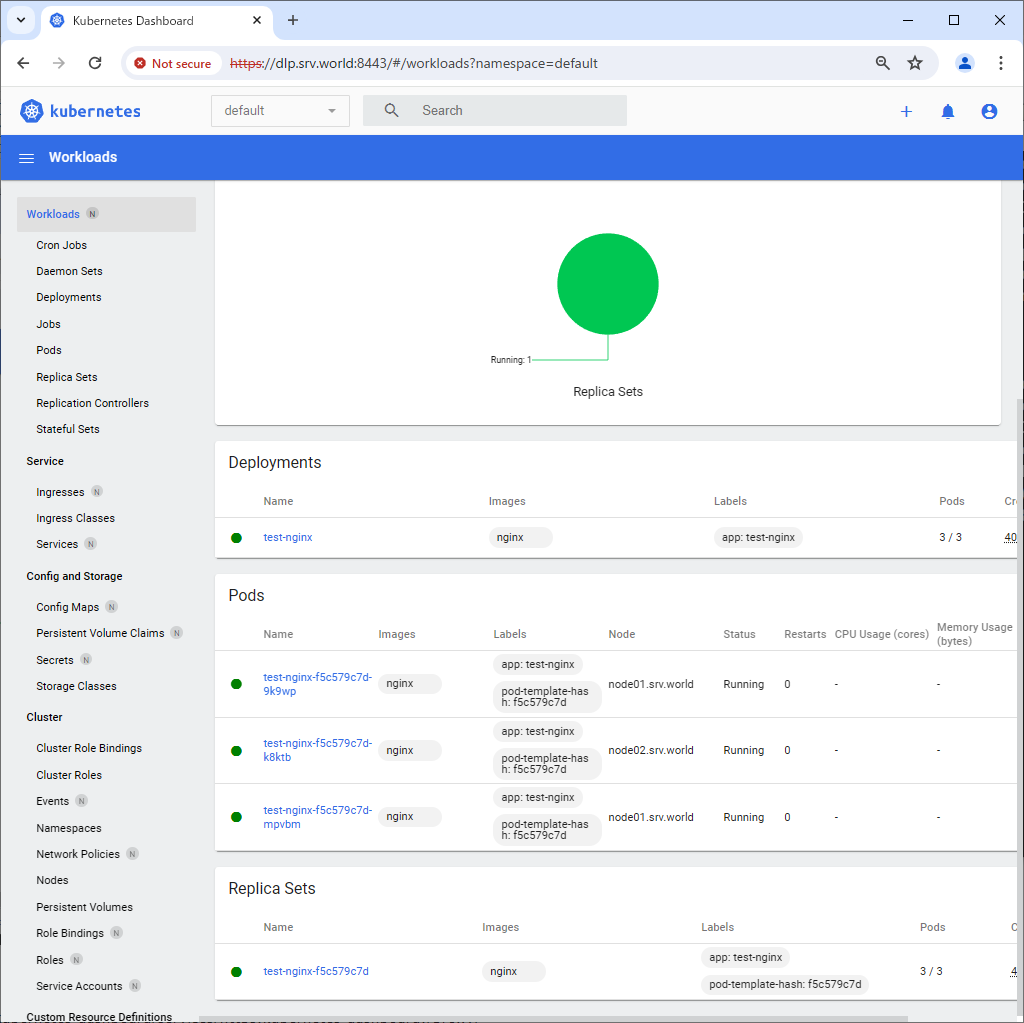

Step [4] After authentication succeeds, the Kubernetes Cluster Dashboard is displayed.
