Overview

What

MicroK8s is a lightweight, fast, and secure Kubernetes distribution designed for developing, testing, and running microservices and containerized applications.

Who

This guide is intended for developers, system administrators, and IT professionals who want to install and use MicroK8s on Debian 12 Bookworm.

Where

You can install MicroK8s on any machine running Debian 12 Bookworm, whether it's a physical machine or a virtual machine.

When

Install MicroK8s when you need a lightweight Kubernetes distribution for development, testing, or production environments.

Why

Using MicroK8s on Debian 12 Bookworm has several pros and cons:

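| Pros | Cons |
|---|---|
| Installs as a single snap with one command and bundles its own `kubectl` and `helm3` | Requires snapd, which Debian does not install by default |
| Low resource consumption, suited to development and testing | The hostpath storage add-on is not suitable for production environments |
| Common components (DNS, dashboard, registry, metrics, observability) are available as add-ons | The observability add-on monitors only the nodes present when it was enabled |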

How

Follow these steps to install MicroK8s on Debian 12 Bookworm:

| Step | Action |
|---|---|
| Step 1 | Update your system. |
| Step 2 | Install Snapd. |
| Step 3 | Enable Snapd. |
| Step 4 | Install MicroK8s. |
| Step 5 | Add your user to the MicroK8s group. |
| Step 6 | Activate essential MicroK8s services. |
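The six steps above can be collected into one script. This is a minimal sketch, assuming it runs as root on Debian 12 with network access. The names `run` and `install_microk8s` and the `DRY_RUN` switch are hypothetical helpers. Enabling `dns` and `hostpath-storage` in step 6 is only an example of "essential" add-ons, and `snap install core` follows the usual snapd setup on Debian.

```shell
# Sketch of the six installation steps (run as root).
# With DRY_RUN=1, each command is printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1: existing user who should be able to run microk8s without sudo
install_microk8s() {
    user="$1"
    if [ -z "$user" ]; then
        echo "usage: install_microk8s USER" >&2
        return 1
    fi
    # Step 1: update the system
    run apt-get update
    run apt-get -y upgrade
    # Step 2: install snapd
    run apt-get -y install snapd
    # Step 3: enable snapd and install the core snap
    run systemctl enable --now snapd.socket
    run snap install core
    # Step 4: install MicroK8s
    run snap install microk8s --classic
    # Step 5: add the user to the microk8s group (effective at next login)
    run usermod -a -G microk8s "$user"
    # Step 6: wait until MicroK8s is ready, then enable example add-ons
    run microk8s status --wait-ready
    for addon in dns hostpath-storage; do
        run microk8s enable "$addon"
    done
}
```

For example, `DRY_RUN=1 install_microk8s debian` only prints the commands for a user named `debian`. Drop `DRY_RUN` to execute them.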

Consequences

Installing and using MicroK8s on Debian 12 Bookworm can have several consequences:

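| Positive | Negative |
|---|---|
| A working Kubernetes node is available within minutes and can grow into a multi-node cluster with `microk8s add-node` and `microk8s join` | Services such as the dashboard and Grafana are `ClusterIP` only by default, so external access needs port-forwarding or a `NodePort` Service |
| Images can be pushed to the built-in registry on `localhost:32000` without running a separate registry | `LoadBalancer` Services stay at `<pending>` unless a load balancer such as the `metallb` add-on is enabled |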

Conclusion

MicroK8s is an excellent choice for those seeking a lightweight Kubernetes distribution for development and testing on Debian 12 Bookworm. While it has some limitations (for example, its hostpath storage is not suitable for production environments), its ease of use and low resource consumption make it a valuable tool for many users.

Install and Pre-Configure

Install [MicroK8s], the lightweight Kubernetes distribution provided by Canonical.

Step [1] Install Snapd first; refer to the Snapd installation guide.

Step [2] Install MicroK8s with snap.

```
root@bizantum:~# snap install microk8s --classic
microk8s (1.27/stable) v1.27.2 from Canonical✓ installed
```

Step [3] Check the status and configuration of MicroK8s, and try its basic operations.

```
# show status
root@bizantum:~# microk8s status
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none
addons:
  enabled:
    dns # (core) CoreDNS
    ha-cluster # (core) Configure high availability on the current node
    helm # (core) Helm - the package manager for Kubernetes
    helm3 # (core) Helm 3 - the package manager for Kubernetes
  disabled:
    cert-manager # (core) Cloud native certificate management
    community # (core) The community addons repository
    dashboard # (core) The Kubernetes dashboard
    gpu # (core) Automatic enablement of Nvidia CUDA
    host-access # (core) Allow Pods connecting to Host services smoothly
    hostpath-storage # (core) Storage class; allocates storage from host directory
    ingress # (core) Ingress controller for external access
    kube-ovn # (core) An advanced network fabric for Kubernetes
    mayastor # (core) OpenEBS MayaStor
    metallb # (core) Loadbalancer for your Kubernetes cluster
    metrics-server # (core) K8s Metrics Server for API access to service metrics
    minio # (core) MinIO object storage
    observability # (core) A lightweight observability stack for logs, traces and metrics
    prometheus # (core) Prometheus operator for monitoring and logging
    rbac # (core) Role-Based Access Control for authorisation
    registry # (core) Private image registry exposed on localhost:32000
    storage # (core) Alias to hostpath-storage add-on, deprecated
```
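When scripting against MicroK8s, you may need to check whether a given add-on is enabled. This is a minimal sketch: it reads the text of `microk8s status` from standard input and prints `enabled` or `disabled` for one add-on. The function name `addon_state` is hypothetical, and the sketch assumes the output layout shown above, with an `enabled:` list followed by a `disabled:` list.

```shell
# Print "enabled" or "disabled" for add-on $1, reading `microk8s status` output on stdin.
# Prints nothing and returns 1 if the add-on is not listed.
addon_state() {
    awk -v name="$1" '
        $1 == "enabled:"  { section = "enabled";  next }
        $1 == "disabled:" { section = "disabled"; next }
        section != "" && $1 == name { print section; found = 1; exit }
        END { exit (found ? 0 : 1) }
    '
}
```

Usage: `microk8s status | addon_state dns`. With the status output above, this prints `enabled`.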

```
# show config
root@bizantum:~# microk8s config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tC...
    server: https://10.0.0.30:16443
  name: microk8s-cluster
contexts:
- context:
    cluster: microk8s-cluster
    user: admin
  name: microk8s
current-context: microk8s
kind: Config
preferences: {}
users:
- name: admin
  user:
    token: Q1BOYnhLMFJUQUZZdkJaaWZCYWJGdGM5eGY1WUNJdmZKZ1A1UHdlLzdCTT0K
```

```
root@bizantum:~# microk8s kubectl get all
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 4m52s
root@bizantum:~# microk8s kubectl get nodes
NAME STATUS ROLES AGE VERSION
dlp.bizantum.lab Ready <none> 5m13s v1.27.2

# stop MicroK8s
root@bizantum:~# microk8s stop
Stopped.
root@bizantum:~# microk8s status
microk8s is not running, try microk8s start

# start MicroK8s
root@bizantum:~# microk8s start
Started.

# disable MicroK8s
root@bizantum:~# snap disable microk8s
microk8s disabled

# enable MicroK8s
root@bizantum:~# snap enable microk8s
microk8s enabled
```

Deploy Pods

This section shows basic operations for Pods on MicroK8s.

Step [1] Create or delete Pods.

```
# run [test-nginx] pods
root@bizantum:~# microk8s kubectl create deployment test-nginx --image=nginx
deployment.apps/test-nginx created
root@bizantum:~# microk8s kubectl get pods
NAME READY STATUS RESTARTS AGE
test-nginx-5ccf576fbd-zfhgs 1/1 Running 0 22s

# show environment variables of [test-nginx] pod
root@bizantum:~# microk8s kubectl exec test-nginx-5ccf576fbd-zfhgs -- env
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
HOSTNAME=test-nginx-5ccf576fbd-zfhgs
NGINX_VERSION=1.25.1
NJS_VERSION=0.7.12
PKG_RELEASE=1~bookworm
KUBERNETES_PORT=tcp://10.152.183.1:443
KUBERNETES_PORT_443_TCP=tcp://10.152.183.1:443
KUBERNETES_PORT_443_TCP_PROTO=tcp
KUBERNETES_PORT_443_TCP_PORT=443
KUBERNETES_PORT_443_TCP_ADDR=10.152.183.1
KUBERNETES_SERVICE_HOST=10.152.183.1
KUBERNETES_SERVICE_PORT=443
KUBERNETES_SERVICE_PORT_HTTPS=443
HOME=/root

# shell access to [test-nginx] pod
root@bizantum:~# microk8s kubectl exec -it test-nginx-5ccf576fbd-zfhgs -- bash
root@test-nginx-5ccf576fbd-zfhgs:/# hostname
test-nginx-5ccf576fbd-zfhgs
root@test-nginx-5ccf576fbd-zfhgs:/# exit

# show logs of [test-nginx] pod
root@bizantum:~# microk8s kubectl logs test-nginx-5ccf576fbd-zfhgs
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf
10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf
/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2023/06/22 03:44:07 [notice] 1#1: using the "epoll" event method
2023/06/22 03:44:07 [notice] 1#1: nginx/1.25.1
.....
.....
```

```
# scale out pods
root@bizantum:~# microk8s kubectl scale deployment test-nginx --replicas=3
deployment.extensions/test-nginx scaled
root@bizantum:~# microk8s kubectl get pods
NAME READY STATUS RESTARTS AGE
test-nginx-5ccf576fbd-zfhgs 1/1 Running 0 2m25s
test-nginx-5ccf576fbd-fzvjh 1/1 Running 0 6s
test-nginx-5ccf576fbd-bkplz 1/1 Running 0 6s

# create a service
root@bizantum:~# microk8s kubectl expose deployment test-nginx --type="NodePort" --port 80
service "test-nginx" exposed
root@bizantum:~# microk8s kubectl get services test-nginx
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
test-nginx NodePort 10.152.183.245 <none> 80:30865/TCP 6s

# verify access
root@bizantum:~# curl 10.152.183.245
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
.....
.....
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```
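The `curl` above targets the ClusterIP, which is reachable only from cluster nodes. Because the Service type is `NodePort`, clients elsewhere on the network can use any node's IP address plus the node port. The node port is the number after the colon in the `PORT(S)` column (`30865` here).

The following is a minimal sketch that extracts that number. `nodeport_of` is a hypothetical helper name. `microk8s kubectl get service test-nginx -o jsonpath='{.spec.ports[0].nodePort}'` should return the same value directly from the API.

```shell
# Print the node port mapped to service port $2 (default 80) from a PORT(S) value
# such as "80:30865/TCP" or "80:32032/TCP,443:30844/TCP". Returns 1 if none is found.
nodeport_of() {
    printf '%s\n' "$1" | tr ',' '\n' | awk -F'[:/]' -v port="${2:-80}" '
        $1 == port && NF >= 3 { print $2; found = 1; exit }
        END { exit (found ? 0 : 1) }
    '
}
```

For example, `nodeport_of 80:30865/TCP` prints `30865`. A client in the local network could then run `curl http://10.0.0.30:30865/` against the primary node.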

```
# delete service
root@bizantum:~# microk8s kubectl delete services test-nginx
service "test-nginx" deleted

# delete the deployment (and its pods)
root@bizantum:~# microk8s kubectl delete deployment test-nginx
deployment.extensions "test-nginx" deleted
```

Add Nodes

To add nodes to a MicroK8s cluster, configure as follows.

Step [1] On the primary node, display the command that other nodes use to join the cluster.

```
root@bizantum:~# microk8s add-node
From the node you wish to join to this cluster, run the following:
microk8s join 10.0.0.30:25000/f99f1625c1b0d0ca7601a63cf9d6fba5/ff0519fe1876

Use the '--worker' flag to join a node as a worker not running the control plane, eg:
microk8s join 10.0.0.30:25000/f99f1625c1b0d0ca7601a63cf9d6fba5/ff0519fe1876 --worker

If the node you are adding is not reachable through the default interface you can use one of the following:
microk8s join 10.0.0.30:25000/f99f1625c1b0d0ca7601a63cf9d6fba5/ff0519fe1876
```

Step [2] On the new node, install MicroK8s and join the cluster.

```
root@node01:~# snap install microk8s --classic
microk8s (1.27/stable) v1.27.2 from Canonical✓ installed

# run the command confirmed in [1]
root@node01:~# microk8s join 10.0.0.30:25000/f99f1625c1b0d0ca7601a63cf9d6fba5/ff0519fe1876
Contacting cluster at 10.0.0.30
Waiting for this node to finish joining the cluster. .. .. .. ..
```

Step [3] After a few minutes, confirm on the primary node that the new node has been added to the cluster.

```
root@bizantum:~# microk8s kubectl get nodes
NAME STATUS ROLES AGE VERSION
node01.bizantum.lab Ready <none> 86s v1.27.2
dlp.bizantum.lab Ready <none> 18m v1.27.2
```
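Because the join takes a few minutes, a script can poll for it instead of checking by hand. This is a minimal sketch. `node_is_ready` is a hypothetical name; it reads `kubectl get nodes` output on standard input and succeeds only if the named node's `STATUS` column is exactly `Ready`. The commented loop is one way to poll with it, and its 10-second interval and 30 attempts are arbitrary. `microk8s kubectl wait --for=condition=Ready node/node01.bizantum.lab` should be a built-in alternative.

```shell
# Succeed if node $1 has STATUS exactly "Ready" in `kubectl get nodes` output on stdin.
node_is_ready() {
    awk -v node="$1" '
        NR > 1 && $1 == node { found = ($2 == "Ready") }
        END { exit (found ? 0 : 1) }
    '
}

# Example polling loop on the primary node (not executed here):
# i=0
# until microk8s kubectl get nodes | node_is_ready node01.bizantum.lab; do
#     i=$((i + 1)); [ "$i" -ge 30 ] && { echo "timed out" >&2; break; }
#     sleep 10
# done
```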

Step [4] To remove a node, proceed as follows.

```
# on the target node, leave the cluster first
root@node01:~# microk8s leave
Generating new cluster certificates. Waiting for node to start.

# on the primary node, remove the target node
root@bizantum:~# microk8s remove-node node01.bizantum.lab
root@bizantum:~# microk8s kubectl get nodes
NAME STATUS ROLES AGE VERSION
dlp.bizantum.lab Ready <none> 20m v1.27.2
```

Enable Dashboard

To enable the Dashboard on a MicroK8s cluster, configure as follows.

Step [1] Enable the Dashboard add-on on the primary node.

```
root@bizantum:~# microk8s enable dashboard
Infer repository core for addon dashboard
Enabling Kubernetes Dashboard
Infer repository core for addon metrics-server
Addon core/metrics-server is already enabled
Applying manifest
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
secret/microk8s-dashboard-token unchanged

If RBAC is not enabled access the dashboard using the token retrieved with:

microk8s kubectl describe secret -n kube-system microk8s-dashboard-token

Use this token in the https login UI of the kubernetes-dashboard service.

In an RBAC enabled setup (microk8s enable RBAC) you need to create a user with restricted
permissions as shown in:
https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md
```

```
root@bizantum:~# microk8s enable dns
Infer repository core for addon dns
Enabling DNS
Using host configuration from /etc/resolv.conf
Applying manifest
serviceaccount/coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created
clusterrole.rbac.authorization.k8s.io/coredns created
clusterrolebinding.rbac.authorization.k8s.io/coredns created
Adding argument --cluster-domain to nodes.
Adding argument --cluster-dns to nodes.
Restarting kubelet
Restarting nodes.
DNS is enabled
root@bizantum:~# microk8s kubectl get services -n kube-system
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
metrics-server ClusterIP 10.152.183.235 <none> 443/TCP 3m47s
kubernetes-dashboard ClusterIP 10.152.183.35 <none> 443/TCP 2m1s
dashboard-metrics-scraper ClusterIP 10.152.183.115 <none> 8000/TCP 2m1s
kube-dns ClusterIP 10.152.183.10 <none> 53/UDP,53/TCP,9153/TCP 106s
```

```
# confirm security token
root@bizantum:~# microk8s config | grep token
    token: Q1BOYnhLMFJUQUZZdkJaaWZCYWJGdGM5eGY1WUNJdmZKZ1A1UHdlLzdCTT0K
```
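Instead of copying the token out of the `grep` output by eye, you can print only its value. This is a minimal sketch. `kubeconfig_token` is a hypothetical helper that reads kubeconfig text (for example from `microk8s config`) on standard input and prints the first `token:` value. The token grants admin access, so avoid saving it in files or shell history.

```shell
# Print the first "token:" value found in kubeconfig text read from stdin.
# Returns 1 if no token line is present.
kubeconfig_token() {
    awk '$1 == "token:" { print $2; found = 1; exit }
         END { exit (found ? 0 : 1) }'
}
```

Usage: `microk8s config | kubeconfig_token`.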

```
# if needed, set up port-forwarding to allow external access
root@bizantum:~# microk8s kubectl port-forward -n kube-system service/kubernetes-dashboard --address 0.0.0.0 10443:443
Forwarding from 0.0.0.0:10443 -> 8443
```

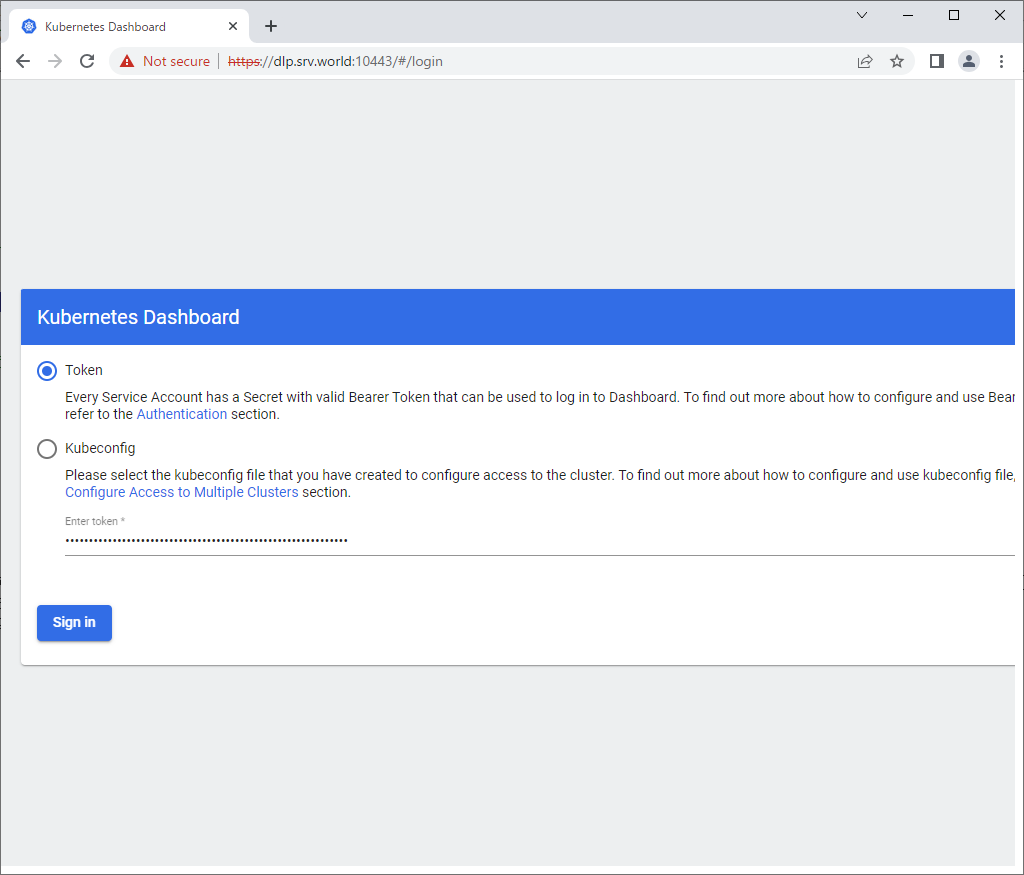

Step [2] With a web browser on any client computer in the local network, access [https://(MicroK8s primary node's hostname or IP address):10443/]. Paste the security token you confirmed in [1] into the [Enter token] field and click the [Sign In] button.

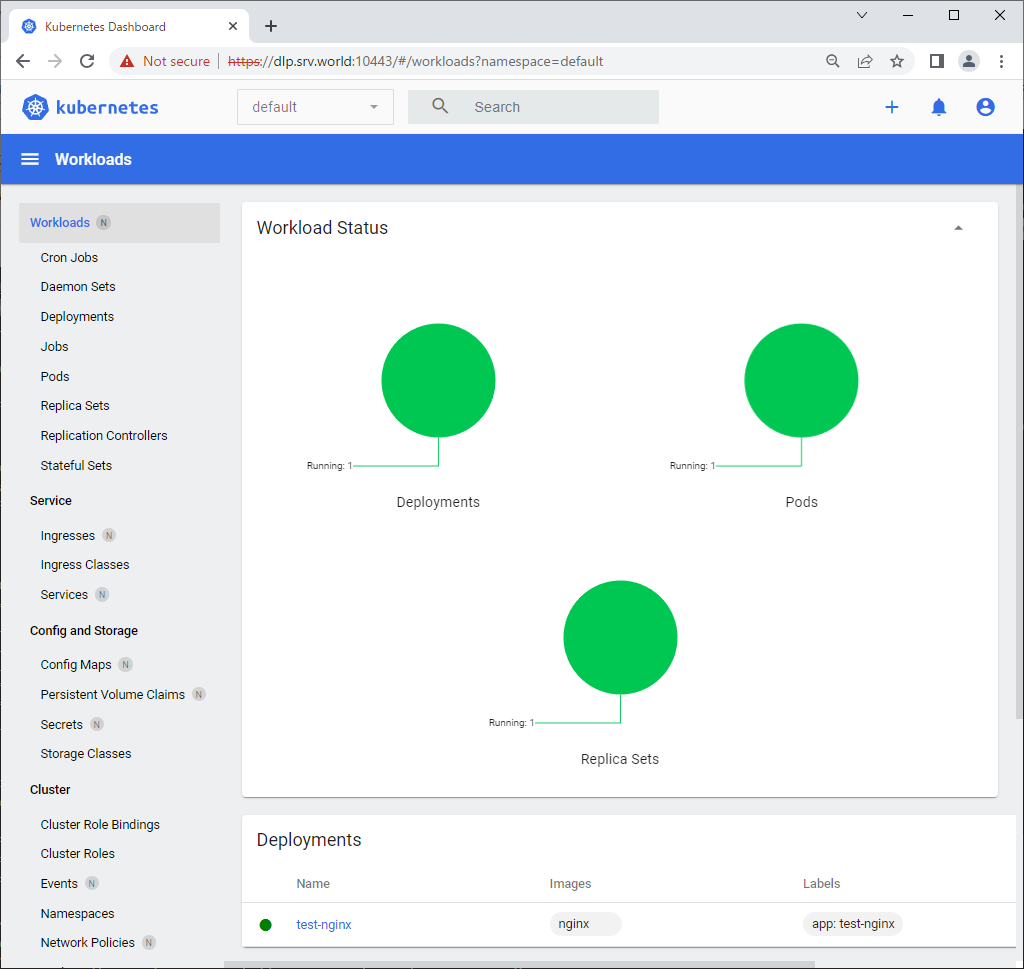

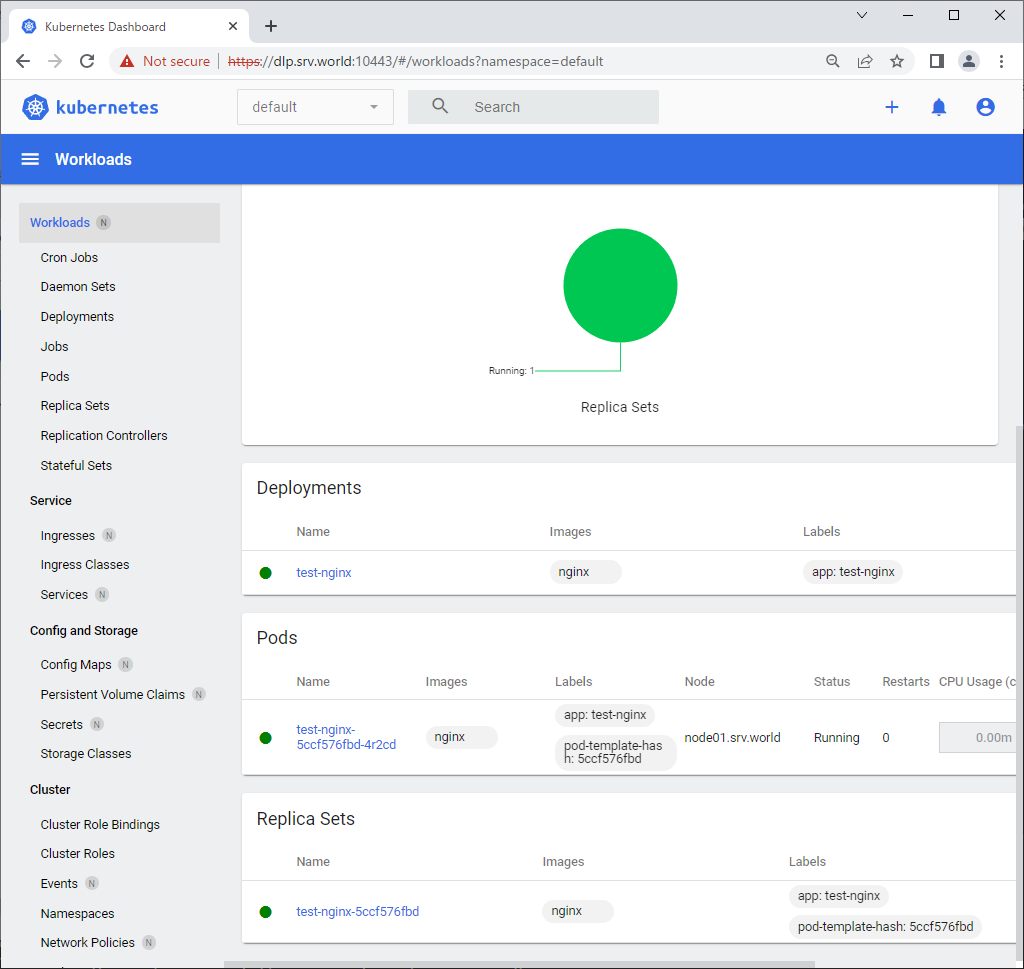

Step [3] After authentication succeeds, the MicroK8s cluster dashboard is displayed.

Use External Storage

Configure external storage for MicroK8s. This example uses an NFS server.

Step [1] Configure an NFS server on any node; refer to the NFS server setup guide. This example uses the [/home/nfsshare] directory on the NFS server running on [nfs.bizantum.lab (10.0.0.35)].

Step [2] Create a PV (Persistent Volume) object and a PVC (Persistent Volume Claim) object.

```
# create PV definition
root@bizantum:~# vi nfs-pv.yml
apiVersion: v1
kind: PersistentVolume
metadata:
  # any PV name
  name: nfs-pv
spec:
  capacity:
    # storage size
    storage: 10Gi
  accessModes:
    # Access Modes:
    # - ReadWriteMany (RW from multi nodes)
    # - ReadWriteOnce (RW from a node)
    # - ReadOnlyMany (R from multi nodes)
    - ReadWriteMany
  # retain even if pods terminate
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-server
  nfs:
    # NFS server's definition
    path: /home/nfsshare
    server: 10.0.0.35
    readOnly: false

root@bizantum:~# microk8s kubectl apply -f nfs-pv.yml
persistentvolume "nfs-pv" created
root@bizantum:~# microk8s kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
nfs-pv 10Gi RWX Retain Available nfs-server 5s
```

```
# create PVC definition
root@bizantum:~# vi nfs-pvc.yml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  # any PVC name
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs-server

root@bizantum:~# microk8s kubectl apply -f nfs-pvc.yml
persistentvolumeclaim "nfs-pvc" created
root@bizantum:~# microk8s kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
nfs-pvc Bound nfs-pv 10Gi RWX nfs-server 5s
```
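If you create NFS-backed volumes often, the two manifests above can be generated from a few parameters and piped straight to `kubectl apply -f -`.

This is a sketch based on the settings of this example: `ReadWriteMany`, `Retain`, and a `nfs-server` class name used only for binding. The function name `nfs_pv_pvc` and its parameter order are hypothetical. The PVC also sets `volumeName`, so it binds to exactly this PV. Every node that may run the Pods also needs an NFS client (the `nfs-common` package on Debian), because the share is mounted on the host.

```shell
# Print a PersistentVolume and a matching PersistentVolumeClaim for an NFS export.
# Usage: nfs_pv_pvc NAME SERVER PATH SIZE
nfs_pv_pvc() {
    if [ "$#" -ne 4 ]; then
        echo "usage: nfs_pv_pvc NAME SERVER PATH SIZE" >&2
        return 1
    fi
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1-pv
spec:
  capacity:
    storage: $4
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-server
  nfs:
    path: $3
    server: $2
    readOnly: false
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1-pvc
spec:
  # bind to exactly the PV defined above
  volumeName: $1-pv
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $4
  storageClassName: nfs-server
EOF
}
```

For example, `nfs_pv_pvc nfs 10.0.0.35 /home/nfsshare 10Gi | microk8s kubectl apply -f -` produces objects named `nfs-pv` and `nfs-pvc`, as in the manual steps.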

Step [3] Create Pods that use the PVC above.

```
root@bizantum:~# vi nginx-nfs.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  # any Deployment name
  name: nginx-nfs
  labels:
    name: nginx-nfs
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-nfs
  template:
    metadata:
      labels:
        app: nginx-nfs
    spec:
      containers:
      - name: nginx-nfs
        image: nginx
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: nfs-share
          # mount point in container
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nfs-share
        persistentVolumeClaim:
          # PVC name you created
          claimName: nfs-pvc

root@bizantum:~# microk8s kubectl apply -f nginx-nfs.yml
deployment.apps/nginx-nfs created
root@bizantum:~# microk8s kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nginx-nfs-5bb7fbc8fb-mhs5n 1/1 Running 0 3m25s 10.1.142.73 dlp.bizantum.lab <none> <none>
nginx-nfs-5bb7fbc8fb-vxkzd 1/1 Running 0 3m25s 10.1.40.197 node01.bizantum.lab <none> <none>
nginx-nfs-5bb7fbc8fb-v9fx9 1/1 Running 0 3m25s 10.1.40.198 node01.bizantum.lab <none> <none>
root@bizantum:~# microk8s kubectl expose deployment nginx-nfs --type="NodePort" --port 80
service/nginx-nfs exposed
root@bizantum:~# microk8s kubectl get service nginx-nfs
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
nginx-nfs NodePort 10.152.183.161 <none> 80:30887/TCP 6s

# on the NFS server, create a test file under the NFS shared directory
root@nfs:~# echo 'NFS Persistent Storage Test' > /home/nfsshare/index.html

# verify access from a node that can reach the MicroK8s cluster
root@bizantum:~# curl 10.152.183.161
NFS Persistent Storage Test
```

Enable Registry

To enable the MicroK8s built-in registry, configure as follows.

Step [1] Enable the built-in registry on the primary node. Once it is enabled, [kube-proxy] listens on [0.0.0.0:32000].

```
# enable registry with backend storage size 30G
# default size is 20G if it's not specified
# the size can be specified on MicroK8s 1.18.3 or later
# (newer releases prefer the --size argument; see the warning below)
root@bizantum:~# microk8s enable registry:size=30Gi
Infer repository core for addon registry
Infer repository core for addon hostpath-storage
Enabling default storage class.
WARNING: Hostpath storage is not suitable for production environments.
         A hostpath volume can grow beyond the size limit set in the volume claim manifest.

deployment.apps/hostpath-provisioner created
storageclass.storage.k8s.io/microk8s-hostpath created
serviceaccount/microk8s-hostpath created
clusterrole.rbac.authorization.k8s.io/microk8s-hostpath created
clusterrolebinding.rbac.authorization.k8s.io/microk8s-hostpath created
Storage will be available soon.
WARNING: This style of specifying size is deprecated. Use newer --size argument instead.
The registry will be created with the size of 30Gi.
Default storage class will be used.
namespace/container-registry created
persistentvolumeclaim/registry-claim created
deployment.apps/registry created
service/registry created
configmap/local-registry-hosting configured

# [registry] pod starts
root@bizantum:~# microk8s kubectl get pods -n container-registry
NAME READY STATUS RESTARTS AGE
registry-9865b655c-48vqk 1/1 Running 0 31s
```

Step [2] Once the registry is enabled, you can use it with the usual image operations.

```
root@bizantum:~# podman images
REPOSITORY TAG IMAGE ID CREATED SIZE
docker.io/library/debian latest 49081a1edb0b 9 days ago 121 MB

# tag and push
root@bizantum:~# podman tag 49081a1edb0b localhost:32000/debian:registry
root@bizantum:~# podman push localhost:32000/debian:registry --tls-verify=false
Getting image source signatures
Copying blob 332b199f36eb done
Copying config 49081a1edb done
Writing manifest to image destination
Storing signatures
root@bizantum:~# podman images
REPOSITORY TAG IMAGE ID CREATED SIZE
docker.io/library/debian latest 49081a1edb0b 9 days ago 121 MB
localhost:32000/debian registry 49081a1edb0b 9 days ago 121 MB
```
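Passing `--tls-verify=false` on every push works, but you can also tell podman once that the MicroK8s registry uses plain HTTP. This is a minimal sketch, assuming podman's `registries.conf` in version 2 format at its usual system path `/etc/containers/registries.conf`.

The function `mark_insecure_registry` is hypothetical. It takes the file path as a parameter, so you can try it on a copy first. Only do this for a registry you trust on your own network.

```shell
# Append an insecure (plain HTTP) [[registry]] entry to a registries.conf file,
# unless an entry for the same location already exists.
# Usage: mark_insecure_registry FILE LOCATION
mark_insecure_registry() {
    conf="$1"
    location="$2"
    if [ -f "$conf" ] && grep -qF "location = \"$location\"" "$conf"; then
        return 0   # already configured
    fi
    cat >> "$conf" <<EOF

[[registry]]
location = "$location"
insecure = true
EOF
}
```

For example, after `mark_insecure_registry /etc/containers/registries.conf localhost:32000`, `podman push localhost:32000/debian:registry` should no longer need the `--tls-verify=false` flag.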

Enable Metrics Server

Enable the Metrics Server add-on to monitor CPU and memory usage in a MicroK8s cluster.

Step [1] Enable the Metrics Server add-on on the primary node.

```
root@bizantum:~# microk8s enable metrics-server
Infer repository core for addon metrics-server
Addon core/metrics-server is already enabled
root@bizantum:~# microk8s kubectl get pods -n kube-system
NAME READY STATUS RESTARTS AGE
dashboard-metrics-scraper-5cb4f4bb9c-hf2tb 1/1 Running 0 80m
kubernetes-dashboard-fc86bcc89-cwsrr 1/1 Running 0 80m
hostpath-provisioner-58694c9f4b-69ghl 1/1 Running 0 55m
metrics-server-7747f8d66b-9d7fl 1/1 Running 0 83m
coredns-7745f9f87f-cvv9j 1/1 Running 0 80m
calico-kube-controllers-6c99c8747f-cw4jv 1/1 Running 0 104m
calico-node-6qjbd 1/1 Running 0 87m
calico-node-prlb7 1/1 Running 0 86m
# [metrics-server] pod has been deployed
```

Step [2] To display CPU and memory usage, run the following commands.

```
root@bizantum:~# microk8s kubectl top node
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
dlp.bizantum.lab 193m 2% 5196Mi 32%
node01.bizantum.lab 115m 1% 2815Mi 17%
root@bizantum:~# microk8s kubectl top pod
NAME CPU(cores) MEMORY(bytes)
wordpress-559b788c6b-5b86g 4m 154Mi
wordpress-mariadb-0 3m 169Mi
```
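You can turn the `kubectl top node` output into a simple check, for example to flag nodes whose memory usage crosses a threshold. This is a minimal sketch. `nodes_over_memory` is a hypothetical name, and you pass the threshold in as a percentage. It assumes the column order shown above (`NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%`).

```shell
# Print the names of nodes whose MEMORY% is at or above threshold $1 (a number),
# reading `kubectl top node` output on stdin. The header line is skipped.
nodes_over_memory() {
    awk -v limit="$1" '
        NR > 1 {
            pct = $5
            sub(/%$/, "", pct)
            if (pct + 0 >= limit + 0) print $1
        }
    '
}
```

Usage: `microk8s kubectl top node | nodes_over_memory 30`. With the figures above, only `dlp.bizantum.lab` (32%) is reported.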

Enable Observability

Enable the Observability add-on to monitor metrics on a MicroK8s cluster.

Step [1] Enable the built-in Observability add-on on the primary node.

```
root@bizantum:~# microk8s enable observability
Infer repository core for addon observability
Addon core/dns is already enabled
Addon core/helm3 is already enabled
Addon core/hostpath-storage is already enabled
Enabling observability
Release "kube-prom-stack" does not exist. Installing it now.
NAME: kube-prom-stack
LAST DEPLOYED: Wed Jun 21 23:28:56 2023
NAMESPACE: observability
STATUS: deployed
REVISION: 1
NOTES:
kube-prometheus-stack has been installed. Check its status by running:
  kubectl --namespace observability get pods -l "release=kube-prom-stack"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.
Release "loki" does not exist. Installing it now.
NAME: loki
LAST DEPLOYED: Wed Jun 21 23:29:24 2023
NAMESPACE: observability
STATUS: deployed
REVISION: 1
NOTES:
The Loki stack has been deployed to your cluster. Loki can now be added as a datasource in Grafana.

See http://docs.grafana.org/features/datasources/loki/ for more detail.
Release "tempo" does not exist. Installing it now.
NAME: tempo
LAST DEPLOYED: Wed Jun 21 23:29:26 2023
NAMESPACE: observability
STATUS: deployed
REVISION: 1
TEST SUITE: None
Adding argument --authentication-kubeconfig to nodes.
Adding argument --authorization-kubeconfig to nodes.
Restarting nodes.
Adding argument --authentication-kubeconfig to nodes.
Adding argument --authorization-kubeconfig to nodes.
Restarting nodes.
Adding argument --metrics-bind-address to nodes.
Restarting nodes.

Note: the observability stack is setup to monitor only the current nodes of the MicroK8s cluster.
For any nodes joining the cluster at a later stage this addon will need to be set up again.

Observability has been enabled (user/pass: admin/prom-operator)
```

```
root@bizantum:~# microk8s kubectl get services -n observability
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kube-prom-stack-kube-state-metrics ClusterIP 10.152.183.71 <none> 8080/TCP 118s
kube-prom-stack-kube-prome-operator ClusterIP 10.152.183.183 <none> 443/TCP 118s
kube-prom-stack-grafana ClusterIP 10.152.183.189 <none> 80/TCP 118s
kube-prom-stack-kube-prome-alertmanager ClusterIP 10.152.183.197 <none> 9093/TCP 118s
kube-prom-stack-prometheus-node-exporter ClusterIP 10.152.183.248 <none> 9100/TCP 118s
kube-prom-stack-kube-prome-prometheus ClusterIP 10.152.183.59 <none> 9090/TCP 118s
alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 113s
prometheus-operated ClusterIP None <none> 9090/TCP 112s
loki-memberlist ClusterIP None <none> 7946/TCP 102s
loki-headless ClusterIP None <none> 3100/TCP 102s
loki ClusterIP 10.152.183.134 <none> 3100/TCP 102s
tempo ClusterIP 10.152.183.91 <none> 3100/TCP,16687/TCP,16686/TCP,6831/UDP,6832/UDP,14268/TCP,14250/TCP,9411/TCP,55680/TCP,55681/TCP,4317/TCP,4318/TCP,55678/TCP 100s
root@bizantum:~# microk8s kubectl get pods -n observability
NAME READY STATUS RESTARTS AGE
kube-prom-stack-kube-prome-operator-64ffd55b77-59922 1/1 Running 0 3m30s
tempo-0 2/2 Running 0 3m12s
kube-prom-stack-prometheus-node-exporter-lw2km 1/1 Running 0 3m30s
kube-prom-stack-prometheus-node-exporter-fz9d2 1/1 Running 0 3m30s
alertmanager-kube-prom-stack-kube-prome-alertmanager-0 2/2 Running 1 (3m15s ago) 3m25s
kube-prom-stack-kube-state-metrics-6c586bf4c8-k4wzv 1/1 Running 0 3m30s
loki-promtail-784k8 1/1 Running 0 3m14s
kube-prom-stack-grafana-6c47f548d6-hb9p8 3/3 Running 0 3m30s
prometheus-kube-prom-stack-kube-prome-prometheus-0 2/2 Running 0 3m24s
loki-promtail-44lvf 1/1 Running 0 3m14s
loki-0 1/1 Running 0 3m14s
```

```
# if needed, set up port-forwarding to allow external access
# Prometheus UI
root@bizantum:~# microk8s kubectl port-forward -n observability service/prometheus-operated --address 0.0.0.0 9090:9090
Forwarding from 0.0.0.0:9090 -> 9090

# Grafana UI
root@bizantum:~# microk8s kubectl port-forward -n observability service/kube-prom-stack-grafana --address 0.0.0.0 3000:80
Forwarding from 0.0.0.0:3000 -> 3000
```

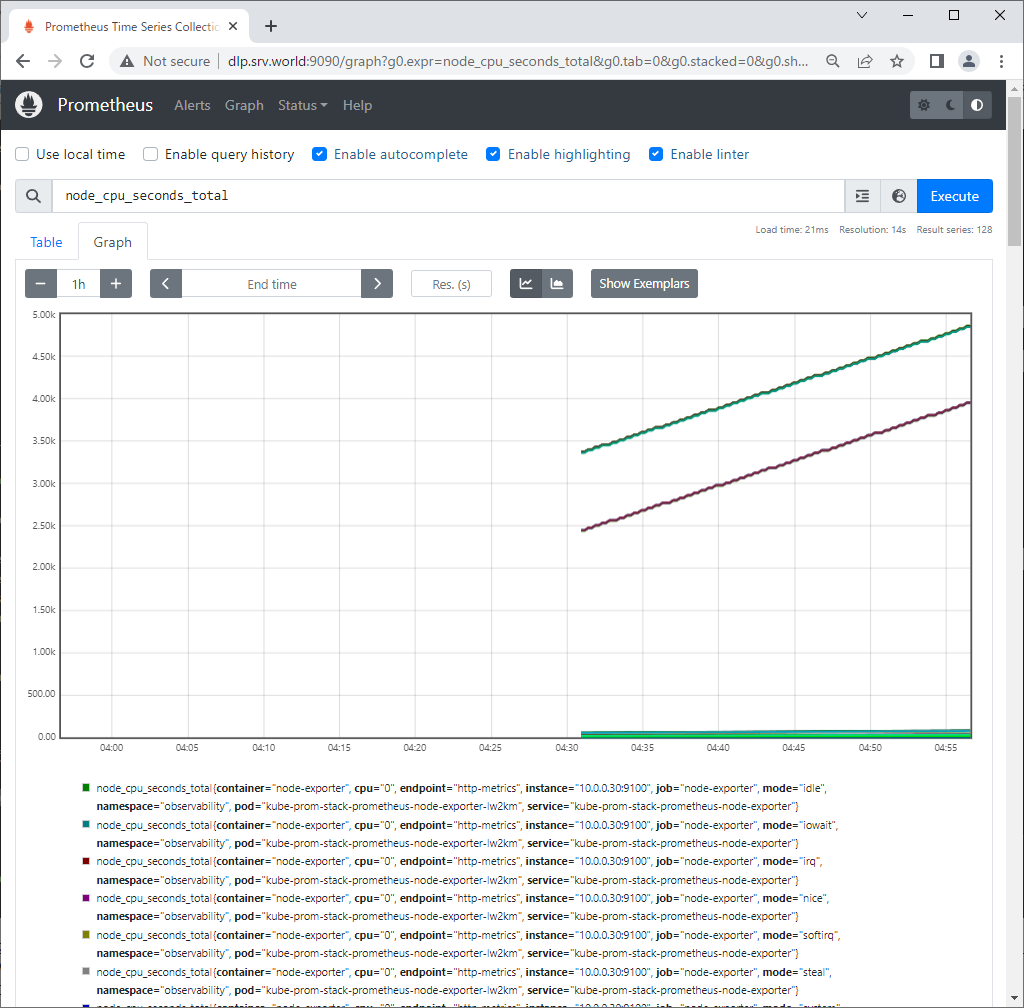

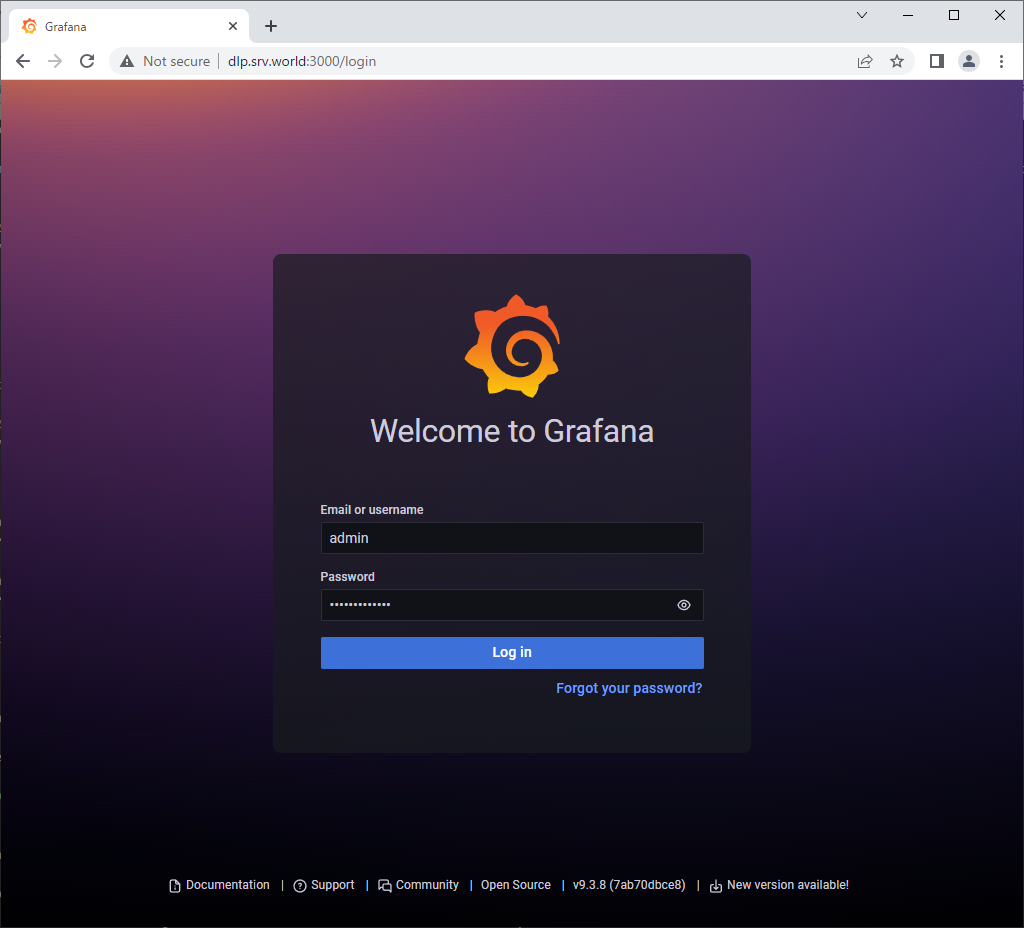

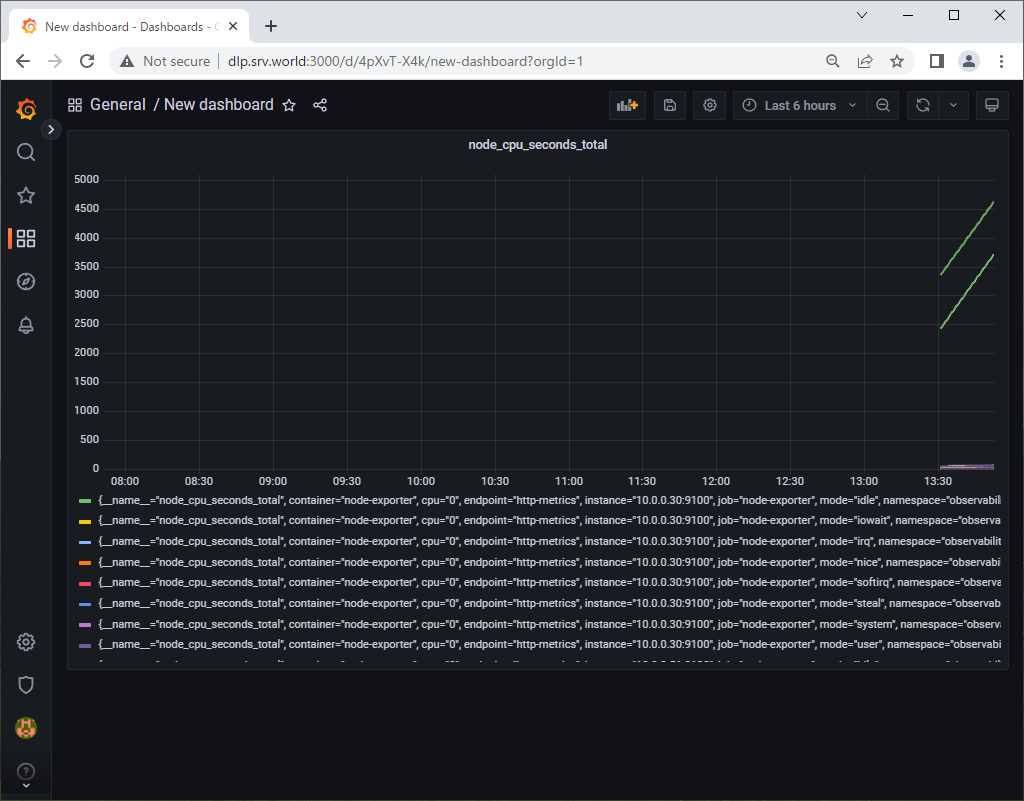

Step [2] With a web browser on a client computer in the local network, access [http://(MicroK8s primary node's hostname or IP address):(forwarded port)]. If the Prometheus or Grafana UI is displayed, the setup is working. The default Grafana username/password is [admin/prom-operator].

Enable Helm3

Enable the Helm3 add-on to deploy applications from Helm charts.

Step [1] Enable the built-in Helm3 add-on on the primary node.

```
root@bizantum:~# microk8s enable helm3
Infer repository core for addon helm3
Addon core/helm3 is already enabled
root@bizantum:~# microk8s helm3 version
version.BuildInfo{Version:"v3.9.1+unreleased", GitCommit:"52c2c02c75248ea9e5752f8dc92acad94bc7ee73", GitTreeState:"clean", GoVersion:"go1.20.4"}
```

Step [2] Basic usage of Helm3.

```
# search charts by keyword on Artifact Hub
root@bizantum:~# microk8s helm3 search hub wordpress
URL CHART VERSION APP VERSION DESCRIPTION
https://artifacthub.io/packages/helm/kube-wordp... 0.1.0 1.1 this is my wordpress package
https://artifacthub.io/packages/helm/bitnami-ak... 15.2.13 6.1.0 WordPress is the world's most popular blogging ...
https://artifacthub.io/packages/helm/shubham-wo... 0.1.0 1.16.0 A Helm chart for Kubernetes
.....
.....

# add repository
root@bizantum:~# microk8s helm3 repo add bitnami https://charts.bitnami.com/bitnami
"bitnami" has been added to your repositories

# display added repositories
root@bizantum:~# microk8s helm3 repo list
NAME URL
bitnami https://charts.bitnami.com/bitnami

# search charts by keyword in a repository
root@bizantum:~# microk8s helm3 search repo bitnami
NAME CHART VERSION APP VERSION DESCRIPTION
bitnami/airflow 14.2.5 2.6.1 Apache Airflow is a tool to express and execute...
bitnami/apache 9.6.3 2.4.57 Apache HTTP Server is an open-source HTTP serve...
bitnami/apisix 2.0.0 3.3.0 Apache APISIX is high-performance, real-time AP...
bitnami/appsmith 0.3.7 1.9.21 Appsmith is an open source platform for buildin...
bitnami/argo-cd 4.7.12 2.7.5 Argo CD is a continuous delivery tool for Kuber...
bitnami/argo-workflows 5.3.5 3.4.8 Argo Workflows is meant to orchestrate Kubernet...
.....
.....

# display description of a chart
# helm show [all|chart|readme|values] [chart name]
root@bizantum:~# microk8s helm3 show chart bitnami/wordpress
annotations:
  category: CMS
  licenses: Apache-2.0
apiVersion: v2
appVersion: 6.2.2
dependencies:
- condition: memcached.enabled
  name: memcached
  repository: oci://registry-1.docker.io/bitnamicharts
  version: 6.x.x
- condition: mariadb.enabled
  name: mariadb
  repository: oci://registry-1.docker.io/bitnamicharts
  version: 12.x.x
- name: common
  repository: oci://registry-1.docker.io/bitnamicharts
  tags:
  - bitnami-common
  version: 2.x.x
description: WordPress is the world's most popular blogging and content management
  platform. Powerful yet simple, everyone from students to global corporations use
  it to build beautiful, functional websites.
home: https://bitnami.com
icon: https://bitnami.com/assets/stacks/wordpress/img/wordpress-stack-220x234.png
keywords:
- application
- blog
- cms
- http
- php
- web
- wordpress
maintainers:
- name: VMware, Inc.
  url: https://github.com/bitnami/charts
name: wordpress
sources:
- https://github.com/bitnami/charts/tree/main/bitnami/wordpress
version: 16.1.18
```

# deploy application by specified chart

# helm install [any name] [chart name]

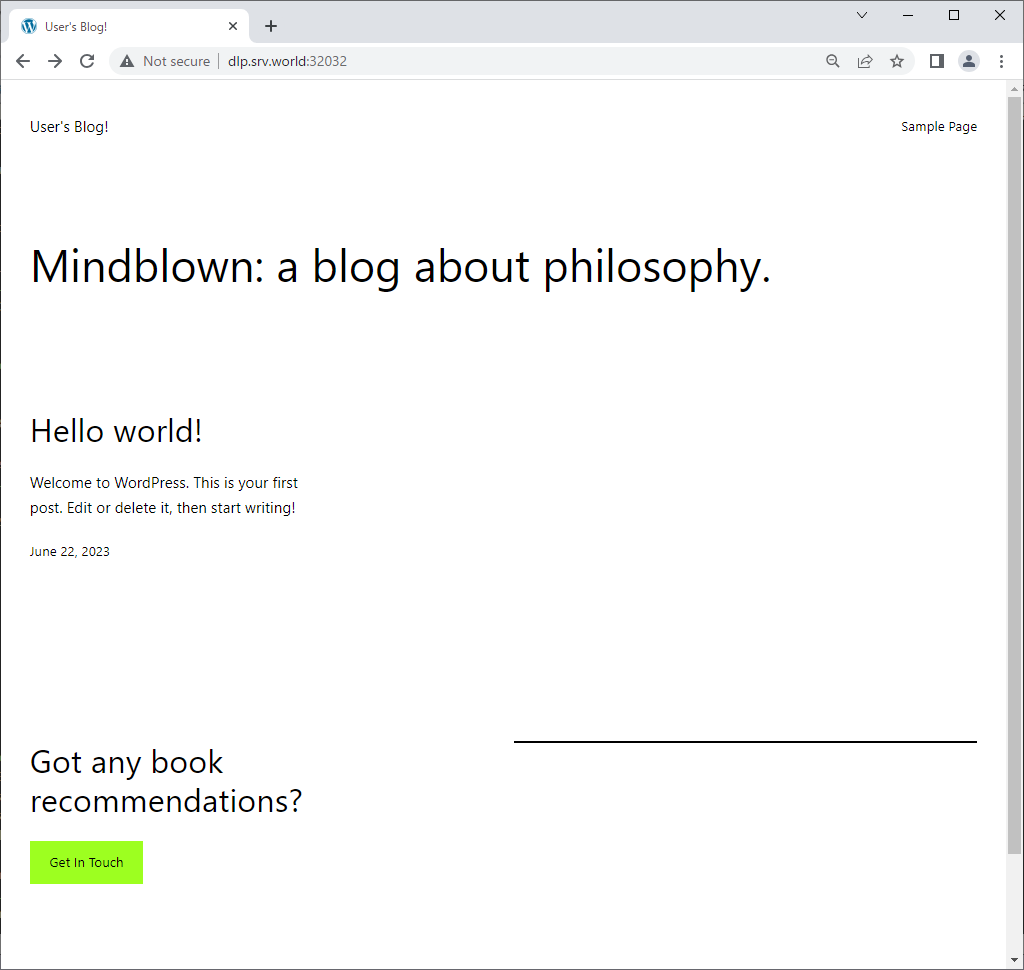

root@bizantum:~# microk8s helm3 install wordpress bitnami/wordpress

NAME: wordpress

LAST DEPLOYED: Thu Jun 22 00:04:06 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: wordpress

CHART VERSION: 16.1.18

APP VERSION: 6.2.2

** Please be patient while the chart is being deployed **

Your WordPress site can be accessed through the following DNS name from within your cluster:

wordpress.default.svc.cluster.local (port 80)

To access your WordPress site from outside the cluster follow the steps below:

1. Get the WordPress URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace default -w wordpress'

export SERVICE_IP=$(kubectl get svc --namespace default wordpress --template "{{ range (index .status.loadBalancer.ingress 0) }}{{ . }}{{ end }}")

echo "WordPress URL: http://$SERVICE_IP/"

echo "WordPress Admin URL: http://$SERVICE_IP/admin"

2. Open a browser and access WordPress using the obtained URL.

3. Login with the following credentials below to see your blog:

echo Username: user

echo Password: $(kubectl get secret --namespace default wordpress -o jsonpath="{.data.wordpress-password}" | base64 -d)

# display deployed applications

root@bizantum:~# microk8s helm3 list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

wordpress default 1 2023-06-22 00:04:06.410829446 -0500 CDT deployed wordpress-16.1.18 6.2.2

root@bizantum:~# microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

wordpress-mariadb-0 0/1 Running 0 55s

wordpress-559b788c6b-5b86g 0/1 Running 0 55s

# display status of a deployed apprication

root@bizantum:~# microk8s helm3 status wordpress

NAME: wordpress

LAST DEPLOYED: Thu Jun 22 00:04:06 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: wordpress

CHART VERSION: 16.1.18

APP VERSION: 6.2.2

.....

.....

root@bizantum:~# microk8s kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 94m

wordpress-mariadb ClusterIP 10.152.183.104 <none> 3306/TCP 6m30s

wordpress LoadBalancer 10.152.183.243 <pending> 80:32032/TCP,443:30844/TCP 6m30s

# uninstall a deployed application

root@bizantum:~# microk8s helm3 uninstall wordpress

release "wordpress" uninstalled
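The `wordpress` Service above stays at `EXTERNAL-IP <pending>` because nothing in this cluster implements `LoadBalancer` Services. The `metallb` add-on, which is disabled here, is one option. For a lab, a simpler alternative is to override the chart's Service type at install time.

This is a sketch, assuming the Bitnami chart's `service.type` value. The function `install_wordpress_nodeport` and its `DRY_RUN` switch are hypothetical.

```shell
# Install the Bitnami WordPress chart with a NodePort Service instead of LoadBalancer.
# $1: release name (default "wordpress"). With DRY_RUN=1, the command is only printed.
install_wordpress_nodeport() {
    release="${1:-wordpress}"
    set -- microk8s helm3 install "$release" bitnami/wordpress --set service.type=NodePort
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}
```

After installation, `microk8s kubectl get svc wordpress` shows a `80:<node port>/TCP` mapping. WordPress is then reachable at `http://(node's IP address):(node port)/` without a load balancer.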

Horizontal Pod Autoscaler

Configure the Horizontal Pod Autoscaler (HPA) to scale Pods automatically.

Step [1] Enable the Metrics Server add-on; refer to the [Enable Metrics Server] section.

Step [2] The following is an example Deployment with a Horizontal Pod Autoscaler.

```
root@bizantum:~# vi my-nginx.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: my-nginx
  name: my-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      run: my-nginx
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - image: nginx
        name: my-nginx
        resources:
          # requests : set minimum required resources when creating pods
          requests:
            # 250m : 0.25 CPU
            cpu: 250m
            memory: 64Mi
          # set maximum resources
          limits:
            cpu: 500m
            memory: 128Mi

root@bizantum:~# vi hpa.yml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-nginx-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    # target Deployment name
    name: my-nginx
  minReplicas: 1
  # maximum number of replicas
  maxReplicas: 4
  metrics:
  - type: Resource
    resource:
      # scale if target CPU utilization is over 20%
      name: cpu
      target:
        type: Utilization
        averageUtilization: 20

root@bizantum:~# microk8s kubectl apply -f my-nginx.yml -f hpa.yml
deployment.apps/my-nginx created
horizontalpodautoscaler.autoscaling/my-nginx-hpa created
```

# verify settings

root@bizantum:~# microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

my-nginx-65d9d7d54-r6kkx 1/1 Running 0 8s

root@bizantum:~# microk8s kubectl top pod

NAME CPU(cores) MEMORY(bytes)

my-nginx-65d9d7d54-r6kkx 0m 7Mi

# after creating, [TARGETS] value is [unknown]

root@bizantum:~# microk8s kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

my-nginx-hpa Deployment/my-nginx <unknown>/20% 1 4 1 32s

# if some processes run in a pod, [TARGETS] value are gotten

root@bizantum:~# microk8s kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

my-nginx-hpa Deployment/my-nginx 0%/20% 1 4 1 68s

# run some processes in a pod manually and see current state of pods again

root@bizantum:~# microk8s kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

my-nginx-hpa Deployment/my-nginx 108%/20% 1 4 1 12m

# pods have been scaled for settings

root@bizantum:~# microk8s kubectl get pods

NAME READY STATUS RESTARTS AGE

my-nginx-65d9d7d54-r6kkx 1/1 Running 0 12m

my-nginx-65d9d7d54-sqvd2 1/1 Running 0 17s

my-nginx-65d9d7d54-rh25s 1/1 Running 0 17s

my-nginx-65d9d7d54-6j8tv 1/1 Running 0 17s

root@bizantum:~# microk8s kubectl top pod

NAME CPU(cores) MEMORY(bytes)

my-nginx-65d9d7d54-6j8tv 0m 7Mi

my-nginx-65d9d7d54-r6kkx 0m 7Mi

my-nginx-65d9d7d54-rh25s 0m 7Mi

my-nginx-65d9d7d54-sqvd2 0m 7Mi
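The jump from 1 to 4 pods follows the scaling rule described in the Kubernetes HorizontalPodAutoscaler documentation: desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), clamped to [minReplicas, maxReplicas]. Below is a simplified shell sketch using integer percentages. It ignores the HPA's tolerance band, stabilization windows and pod readiness handling, and [hpa_desired_replicas] is a hypothetical name:

```shell
# Simplified HPA replica calculation (hypothetical helper).
# Args: currentReplicas, current utilization %, target utilization %,
#       minReplicas, maxReplicas
hpa_desired_replicas() {
    cur=$1; util=$2; target=$3; min=$4; max=$5
    # ceil(cur * util / target) with integer arithmetic
    desired=$(( (cur * util + target - 1) / target ))
    # clamp to the configured bounds
    if [ "$desired" -lt "$min" ]; then desired=$min; fi
    if [ "$desired" -gt "$max" ]; then desired=$max; fi
    echo "$desired"
}

# Values from the transcript: 1 replica at 108% against a 20% target.
# ceil(1 * 108 / 20) = ceil(5.4) = 6, capped by maxReplicas=4.
hpa_desired_replicas 1 108 20 1 4   # prints 4
```

With the load stopped (0m per pod in the last [top] output), the same rule yields 1 replica, but the HPA waits for its scale-down stabilization window (5 minutes by default) before removing pods.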

Dynamic Volume Provisioning (NFS)

Dynamic Volume Provisioning lets a PV (Persistent Volume) be created automatically when a user creates a PVC (Persistent Volume Claim), so the cluster administrator does not need to create PVs manually.

Step [1]Configure an NFS server on any node, refer to here. In this example, the [/home/nfsshare] directory on the NFS server [nfs.bizantum.lab (10.0.0.35)] is used.

Step [2]Install NFS Client Provisioner with Helm.

root@bizantum:~# microk8s helm3 repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

# nfs.server = (NFS server's hostname or IP address)

# nfs.path = (NFS share Path)

root@bizantum:~# microk8s helm3 install nfs-client -n kube-system --set nfs.server=10.0.0.35 --set nfs.path=/home/nfsshare nfs-subdir-external-provisioner/nfs-subdir-external-provisioner

NAME: nfs-client

LAST DEPLOYED: Thu Jun 22 00:57:10 2023

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

root@bizantum:~# microk8s kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

.....

.....

nfs-client-nfs-subdir-external-provisioner-5447b54dbb-nwgf9 1/1 Running 0 38s

Step [3]The following example uses dynamic volume provisioning from a Pod.

root@bizantum:~# microk8s kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client cluster.local/nfs-client-nfs-subdir-external-provisioner Delete Immediate true 35m

# create PVC

root@bizantum:~# vi my-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-provisioner
  annotations:
    # specify StorageClass name (legacy beta annotation; spec.storageClassName is the current field)
    volume.beta.kubernetes.io/storage-class: nfs-client
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      # volume size
      storage: 5Gi
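The [5Gi] request uses Kubernetes binary suffixes (Ki, Mi, Gi, Ti are powers of 1024). A small shell sketch that converts such quantities to bytes; [quantity_to_bytes] is hypothetical and, for brevity, handles only integer values with those suffixes (decimal suffixes such as M or G and fractional values are not supported):

```shell
# Hypothetical helper: convert an integer Kubernetes binary quantity to bytes.
quantity_to_bytes() {
    q=$1
    case "$q" in
        *Ki) echo $(( ${q%Ki} * 1024 )) ;;
        *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
        *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
        *Ti) echo $(( ${q%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
        *)   echo "$q" ;;   # assume a plain byte count
    esac
}

quantity_to_bytes 5Gi           # prints 5368709120
quantity_to_bytes 29303808Ki    # prints 30007099392 (about 28 GiB)
```

The second call converts the 29303808 1K-blocks that [df] reports for the mounted volume further down. That figure is the size of the filesystem backing the NFS share, not the 5Gi requested, because the NFS provisioner does not enforce the requested size as a quota.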

root@bizantum:~# microk8s kubectl apply -f my-pvc.yml

persistentvolumeclaim/my-provisioner created

root@bizantum:~# microk8s kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

my-provisioner Bound pvc-e296db93-8814-47e2-b49d-1fb33f897068 5Gi RWO nfs-client 5s

# PV is generated dynamically

root@bizantum:~# microk8s kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-e296db93-8814-47e2-b49d-1fb33f897068 5Gi RWO Delete Bound default/my-provisioner nfs-client 35s

root@bizantum:~# vi my-pod.yml

apiVersion: v1
kind: Pod
metadata:
  name: my-mginx
spec:
  containers:
  - name: my-mginx
    image: nginx
    ports:
    - containerPort: 80
      name: web
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: nginx-pvc
  volumes:
  - name: nginx-pvc
    persistentVolumeClaim:
      # PVC name you created
      claimName: my-provisioner

root@bizantum:~# microk8s kubectl apply -f my-pod.yml

pod/my-mginx created

root@bizantum:~# microk8s kubectl get pod my-mginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-mginx 1/1 Running 0 18s 10.1.40.214 node01.bizantum.lab <none> <none>

root@bizantum:~# microk8s kubectl exec my-mginx -- df /usr/share/nginx/html

Filesystem 1K-blocks Used Available Use% Mounted on

10.0.0.35:/home/nfsshare/default-my-provisioner-pvc-e296db93-8814-47e2-b49d-1fb33f897068 29303808 1290752 26499072 5% /usr/share/nginx/html
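The mount source in the [df] output shows how the provisioner lays out the share: the nfs-subdir-external-provisioner README describes each volume as a subdirectory named ${namespace}-${pvcName}-${pvName} under [nfs.path]. A sketch that rebuilds that path from the values above; [nfs_subdir_path] is hypothetical, and a custom [pathPattern] setting in the StorageClass may change the layout:

```shell
# Hypothetical helper: rebuild the NFS source path the provisioner uses
# for a volume, following its default ${namespace}-${pvcName}-${pvName} naming.
nfs_subdir_path() {
    server=$1; share=$2; namespace=$3; pvc=$4; pv=$5
    echo "${server}:${share}/${namespace}-${pvc}-${pv}"
}

nfs_subdir_path 10.0.0.35 /home/nfsshare default my-provisioner \
    pvc-e296db93-8814-47e2-b49d-1fb33f897068
# prints 10.0.0.35:/home/nfsshare/default-my-provisioner-pvc-e296db93-8814-47e2-b49d-1fb33f897068
```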

# create a test index file and verify that it is served

root@bizantum:~# echo "Nginx Index" > index.html

root@bizantum:~# microk8s kubectl cp index.html my-mginx:/usr/share/nginx/html/index.html

root@bizantum:~# curl 10.1.40.214

Nginx Index

# when the PVC is deleted, the dynamically provisioned PV is deleted as well (RECLAIMPOLICY is Delete)

root@bizantum:~# microk8s kubectl delete pod my-mginx

pod "my-mginx" deleted

root@bizantum:~# microk8s kubectl delete pvc my-provisioner

persistentvolumeclaim "my-provisioner" deleted

root@bizantum:~# microk8s kubectl get pv

No resources found in default namespace.

Step [4]With a StatefulSet, you can specify [volumeClaimTemplates] so that a PVC is provisioned automatically for each Pod.

root@bizantum:~# microk8s kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client cluster.local/nfs-client-nfs-subdir-external-provisioner Delete Immediate true 22m

root@bizantum:~# vi statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: my-mginx
spec:
  serviceName: my-mginx
  replicas: 1
  selector:
    matchLabels:
      app: my-mginx
  template:
    metadata:
      labels:
        app: my-mginx
    spec:
      containers:
      - name: my-mginx
        image: nginx
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      # specify StorageClass name
      storageClassName: nfs-client
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 5Gi

root@bizantum:~# microk8s kubectl apply -f statefulset.yml

statefulset.apps/my-mginx created

root@bizantum:~# microk8s kubectl get statefulset

NAME READY AGE

my-mginx 1/1 10s

root@bizantum:~# microk8s kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-mginx-0 1/1 Running 0 14s 10.1.40.215 node01.bizantum.lab <none> <none>

root@bizantum:~# microk8s kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-my-mginx-0 Bound pvc-02a2e4cf-dafa-4295-b003-fa13f3e09568 5Gi RWO nfs-client 63s

root@bizantum:~# microk8s kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-02a2e4cf-dafa-4295-b003-fa13f3e09568 5Gi RWO Delete Bound default/data-my-mginx-0 nfs-client 96s
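The PVC name [data-my-mginx-0] follows the StatefulSet convention <volumeClaimTemplate name>-<StatefulSet name>-<ordinal>. A shell sketch that lists the PVC names a StatefulSet would create for a given replica count; [statefulset_pvc_names] is a hypothetical helper:

```shell
# Hypothetical helper: PVC names created from a volumeClaimTemplate,
# one per Pod ordinal (0 .. replicas-1).
statefulset_pvc_names() {
    template=$1; sts=$2; replicas=$3
    i=0
    while [ "$i" -lt "$replicas" ]; do
        echo "${template}-${sts}-${i}"
        i=$(( i + 1 ))
    done
}

statefulset_pvc_names data my-mginx 1   # prints data-my-mginx-0
statefulset_pvc_names data my-mginx 3   # prints data-my-mginx-0, data-my-mginx-1, data-my-mginx-2 (one per line)
```

Scaling this StatefulSet to 3 replicas would therefore add [data-my-mginx-1] and [data-my-mginx-2]. By default, PVCs created from [volumeClaimTemplates] are not deleted when the StatefulSet is scaled down or deleted, so their PVs remain Bound until the PVCs are removed manually.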

